Most language models rely on an Azure AI Content Safety service consisting of an ensemble of models to filter harmful content from prompts and completions. Each of these services can obtain service-specific HPKE keys from the KMS after attestation, and use these keys to secure all inter-service communication.
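The attestation-gated key release described above can be illustrated with a minimal sketch. Everything here is hypothetical: `ToyKMS`, `AttestationReport`, and `measure` are illustrative names, the "key" is a random 32-byte secret standing in for a service-specific HPKE private key, and the sketch skips the hardware signature check that a real attestation report would require.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass


@dataclass(frozen=True)
class AttestationReport:
    """Hypothetical, simplified stand-in for a TEE attestation report."""
    service_name: str
    measurement: str  # hex digest of the code the service claims to run


def measure(code: bytes) -> str:
    """Return a SHA-256 hex digest standing in for a TEE code measurement."""
    return hashlib.sha256(code).hexdigest()


class ToyKMS:
    """Illustrative key service (an assumption, not a real KMS API).

    A per-service key is released only when the reported measurement
    matches the value registered for that service.
    """

    def __init__(self):
        self._expected = {}  # service name -> expected measurement
        self._keys = {}      # service name -> secret key bytes

    def register(self, service_name: str, expected_measurement: str) -> None:
        self._expected[service_name] = expected_measurement
        # Random secret standing in for a service-specific HPKE private key.
        self._keys[service_name] = secrets.token_bytes(32)

    def release_key(self, report: AttestationReport) -> bytes:
        expected = self._expected.get(report.service_name)
        if expected is None:
            raise PermissionError("unknown service")
        # Constant-time comparison of the two measurements.
        if not hmac.compare_digest(expected.encode(), report.measurement.encode()):
            raise PermissionError("attestation measurement mismatch")
        return self._keys[report.service_name]
```

A service whose measurement matches the registered value receives its key; a service running modified code gets a `PermissionError` instead.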

The Confidential AI solution removes the risk of exposing private data by running datasets in secure enclaves. It also provides proof of execution inside a trusted execution environment for compliance purposes.

Businesses need to protect the intellectual property of the models they develop. With growing adoption of the cloud to host data and models, privacy risks have compounded.

Cloud AI security and privacy guarantees are difficult to verify and enforce. If a cloud AI service states that it does not log certain user data, there is generally no way for security researchers to verify this promise, and often no way for the service provider to durably enforce it.

The node agent in the VM enforces a policy over deployments that verifies the integrity and transparency of containers launched in the TEE.
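One way to picture this kind of policy check is an allowlist of container image digests that is consulted before anything launches. The sketch below is an assumption about the general shape of such a check, not the actual node agent: `Container`, `image_digest`, `check_deployment`, and `enforce` are hypothetical names, and images are passed as raw bytes rather than pulled from a registry.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Container:
    """Hypothetical container description used only for this sketch."""
    name: str
    image: bytes  # raw image contents; a real agent would fetch these itself


def image_digest(image: bytes) -> str:
    """Return a content digest in the common 'sha256:<hex>' form."""
    return "sha256:" + hashlib.sha256(image).hexdigest()


def check_deployment(allowed_digests, containers):
    """Return the names of containers whose digest is not in the policy."""
    return [c.name for c in containers
            if image_digest(c.image) not in allowed_digests]


def enforce(allowed_digests, containers) -> None:
    """Refuse the whole deployment if any container violates the policy."""
    rejected = check_deployment(allowed_digests, containers)
    if rejected:
        raise PermissionError(f"policy violation, refusing to launch: {rejected}")
```

Rejecting the entire deployment rather than only the offending container is a design choice in this sketch: it keeps an unreviewed sidecar from running next to an approved workload inside the TEE.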

The surge in dependence on AI for critical functions will only be accompanied by greater interest in these data sets and algorithms from cybercriminals, and by more serious consequences for businesses that don't take steps to protect themselves.

We paired this hardware with a new operating system: a hardened subset of the foundations of iOS and macOS tailored to support Large Language Model (LLM) inference workloads while presenting an extremely narrow attack surface. This allows us to take advantage of iOS security technologies such as Code Signing and sandboxing.

No unauthorized entities can view or modify the data and AI application during execution. This protects both sensitive customer data and AI intellectual property.

Fortanix C-AI makes it easy for a model provider to secure their intellectual property by publishing the algorithm in a secure enclave. Insiders at the cloud provider get no visibility into the algorithms.

Every production Private Cloud Compute software image will be published for independent binary inspection, including the OS, applications, and all relevant executables, which researchers can verify against the measurements in the transparency log.
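The verification step a researcher performs can be sketched with a toy append-only log. This is an illustration under stated assumptions, not the actual Private Cloud Compute log format: the log here is a simple SHA-256 hash chain, whereas production transparency logs commonly use Merkle trees, and `chain_head` and `verify_release` are hypothetical names.

```python
import hashlib

GENESIS = b"\x00" * 32  # fixed starting value for the toy hash chain


def chain_head(measurements) -> str:
    """Fold hex-encoded measurements into a single hash-chain head."""
    head = GENESIS
    for m in measurements:
        head = hashlib.sha256(head + bytes.fromhex(m)).digest()
    return head.hex()


def verify_release(image: bytes, log_entries, trusted_head: str) -> bool:
    """Check that a published image matches an entry in an untampered log.

    log_entries  -- hex SHA-256 measurements as published in the log
    trusted_head -- chain head obtained out of band (an assumption here)
    """
    # First confirm the log itself matches the head the verifier trusts.
    if chain_head(log_entries) != trusted_head:
        return False
    # Then confirm the inspected image's measurement was actually logged.
    return hashlib.sha256(image).hexdigest() in log_entries
```

The two checks are separate on purpose: the first detects a log that was truncated or rewritten, and the second detects an image that was never logged at all.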

The potential of AI and data analytics to drive growth in business, solutions, and services through data-driven innovation is well known, and it explains the sharp rise in AI adoption over the years.

If the system has been built well, users would have high assurance that neither OpenAI (the company behind ChatGPT) nor Azure (the infrastructure provider for ChatGPT) could access their data. This would address a common concern that enterprises have with SaaS-style AI applications like ChatGPT.

The use of confidential computing at multiple stages ensures that data can be processed and models can be developed while the data stays confidential, even while in use.

Fortanix Confidential AI has been specifically designed to address the unique privacy and compliance requirements of regulated industries, as well as the need to protect the intellectual property of AI models.

Mason Gamble Then & Now!

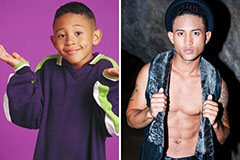

Mason Gamble Then & Now! Tahj Mowry Then & Now!

Tahj Mowry Then & Now! Barbi Benton Then & Now!

Barbi Benton Then & Now! Marcus Jordan Then & Now!

Marcus Jordan Then & Now! Dawn Wells Then & Now!

Dawn Wells Then & Now!